During the development of our second prototype “Shepherd”, we realised that permissions for truly distributed social media are a thorny thing. Within the decentralised design of Solid, we have to define how spaces are controlled in interactions between users. We also have to be mindful of preserving the context of the interaction, while also respecting the privacy of individuals who might belong to different networks, technical or otherwise.

Three models of organising content

To explain the whole issue, we need to lay some groundwork on what design decisions have been made, here at Darcy, and for Solid as a whole. Those design decisions define how users can control the spaces of interaction. Let me start with how posts and comments are modelled. All in all, there are three major models of how to structure content and reactions to the content in social media:

1. The Forum model

This assumes that someone sets up a space for conversations. That person (or group) takes ownership of the space and can usually control the terms on which people interact in that space: Who is allowed to post, what gets deleted, or who is allowed in the space. Inside that space, people can start a conversation around a topic and others can reply to it.

We see this in web forums that run, for example, the venerable phpBB or Simple Machines Forum, or the more modern Discourse.

When it comes to permissions, forums are usually managed by either an individual or a group of “admins” who then set out the rules of who can see, write, react to, or even delete things. The individual user then rarely has to think about permissions at all.

Those administrators can remove whole conversations or just individual replies, although the latter can leave the remaining content stripped of context.

A byproduct of this model is that most forums are somewhat of a close-knit group. They foster a group-identity, and often everyone knows who else is around.

2. The Posts-and-Comments model

In this model, everyone can post content and then others can react to it – be it through emoticons, likes, or comments. We see this model very often: it is used by Facebook, YouTube, Diaspora, and countless other systems.

The upside of this model is that everyone who initially publishes content can usually set fine-grained controls over who gets to see the content and who can react to it. These permissions then seamlessly apply to the reactions and comments attached to this particular content. This means that those who comment on something usually have no control over who gets to see their comments or reactions – they are visible to the same people who can see the original content.

When the original post gets removed, this usually removes all of the comments and reactions to it too. Comments therefore only exist in the context of the initial post.

As far as I know, most of these systems do not tell the commenters who exactly is able to see the original post and consequently the comments. Most often, everything is public. A lot of those systems also have a “visible to friends-of-friends” setting, so you can reach out to people you do not actively know, but still be sort of private.

3. The Everything-is-a-Post model

Twitter and its open-source counterpart Mastodon feature this model. Instead of having a strict hierarchical structure between original content and the reactions to it, comments are modelled as original content of their own. If you reply to a tweet or toot, that reply is its own new and original content.

This has interesting effects: Replies can be the starting point of their own conversations, completely decoupled from the original starting point. Even if that original post gets removed, the rest stays untouched and can stand on its own. But it also gives a somewhat disjointed user experience, as people often find it hard to correctly piece together the whole conversation. And when people who reply to an original post limit who can see their content, others might only be able to see a portion of the conversation.

Another side effect is the lack of noise control. “Sliding into one’s mentions” can range from being just slightly impolite to outright harassing. The larger the network gets, the harder it becomes to control who can partake in a conversation without limiting oneself.

Why we chose the second model

Darcy makes use of the Posts-and-Comments model of organising these things. We find this to be the best compromise between discoverability, self-moderation (as the original poster can remove comments attached to their post) and data sovereignty (the comments are still stored on the commenter’s Solid pod, even when unlinked from the original post).

Distributed content and permissions

In Solid, the permissions for a piece of content are set alongside the content, on the Solid pod where it is stored. As a quick reminder, if Alice posts a picture and Bob comments on it, we have the following data structure:

- Alice’s Solid pod
  - Picture
    - Link to Bob’s comment
- Bob’s Solid pod
  - Comment on Alice’s Picture
    - Link to Alice’s Picture

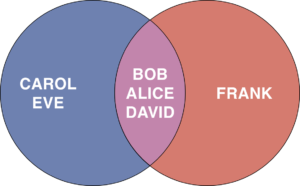

Now, Alice and Bob can freely decide who gets to see what on their own pods. Alice might decide to show her picture to Bob, Carol, David, and Eve, while Bob has set his comments to be visible to Alice, David, and Frank.

Frank can now read a comment, but would not be able to see the original post (Alice would need to have set visibility for Frank too).

Carol and Eve can see Alice’s picture, however they cannot see Bob’s comment underneath.
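To make the mismatch concrete, here is a minimal sketch of the example above. The `Resource` class and its flat reader list are assumptions made for illustration; they are not how Solid or Darcy actually represent access control.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """A resource on a Solid pod with a simplified read list (hypothetical model)."""
    owner: str
    readers: set[str] = field(default_factory=set)

    def can_read(self, agent: str) -> bool:
        # The owner can always read their own resource.
        return agent == self.owner or agent in self.readers


# Each resource carries its own permissions, set independently on its own pod.
picture = Resource(owner="Alice", readers={"Bob", "Carol", "David", "Eve"})
comment = Resource(owner="Bob", readers={"Alice", "David", "Frank"})

people = ["Alice", "Bob", "Carol", "David", "Eve", "Frank"]

sees_both = [p for p in people if picture.can_read(p) and comment.can_read(p)]
post_only = [p for p in people if picture.can_read(p) and not comment.can_read(p)]
comment_only = [p for p in people if comment.can_read(p) and not picture.can_read(p)]

print("both:", sees_both)             # ['Alice', 'Bob', 'David']
print("post only:", post_only)        # ['Carol', 'Eve']
print("comment only:", comment_only)  # ['Frank']
```

Only three of the six people see the whole conversation, which is exactly the disjointed experience described here.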

In the interest of having a non-disjointed conversation, we need to solve this. And when thinking about solutions, we have to assume that a hypothetical attacker has a good grasp of the technology, while the potential victim does not. That way we cover the broadest range of scenarios.

Possible solutions

The easiest solution is to simply have everything public, but that would clearly not work in the long term. People rightfully want the option to have their content show up only for a specific audience.

Solid supports two different approaches, both of which have up- and downsides:

Option 1: When commenting on a post, the commenter copies the permission list from the post and applies it to the comment. This has the advantage that the same set of people who can see the post can also see all the comments. The downside is that it exposes the whole (or parts of the) contact list of the original poster to all commenters. This is obviously fine in a close-knit group, but it may not be fine in larger groups. If Alice makes a post visible to all of her friends, family and coworkers, does she want Bob from accounting to know the names of everyone in that group, just by looking at the metadata of the post?

Another downside is that if Alice later on alters the permissions on the original post, one would need to alter these permissions on all the comments too, otherwise newcomers to the post could not read all the comments. But there currently is no mechanism to apply those changes to the Solid pods of all commenters.

(On the other hand, this second downside could be read as a feature: After all, Bob posted his comment under certain conditions. If Alice changes the conditions later on, then it might make sense that these conditions don’t apply retroactively, because Bob didn’t consent to that. Of course, that implies that Bob took notice of who could read the original post in the first place. And, for example on a site like Facebook, Bob might not have such fine-grained information in the first place.)
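Both downsides of option 1 can be shown with plain sets. This is only a sketch: `copy_permissions` is a hypothetical helper, and real Solid access control lists are richer than a set of names.

```python
def copy_permissions(post_readers: set[str], post_owner: str, commenter: str) -> set[str]:
    """Option 1: give the comment the same audience as the post (hypothetical helper)."""
    # The post owner must be able to read the comment; the commenter owns it anyway.
    return (set(post_readers) | {post_owner}) - {commenter}


post_readers = {"Bob", "Carol", "David", "Eve"}
comment_readers = copy_permissions(post_readers, post_owner="Alice", commenter="Bob")

# Downside 1: Bob's pod now stores part of Alice's contact list.
leaked_to_bob = comment_readers - {"Alice"}
print(sorted(leaked_to_bob))  # ['Carol', 'David', 'Eve']

# Downside 2: Alice later adds Frank, but nothing updates the copy on Bob's pod.
post_readers.add("Frank")
cannot_read_comment = (post_readers - {"Bob"}) - comment_readers
print(sorted(cannot_read_comment))  # ['Frank']
```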

Option 2: All comments are always set to public, while the initial post remains subject to the permissions set by the initial poster. This isn’t as bad as it initially sounds. Due to the data structure of Darcy, the only way to access a comment is by either being the owner of that comment, or by knowing the exact path and filename of the comment. This path and file name are near-randomly generated from the unique IDs of the original post and the comment. Doing things this way somewhat contextualizes discoverability.

So when accessing a post, it will come with a list of URLs to the comments that are linked to that specific post. That means that only those who have permission to read the post get to know the URLs of the comments.
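A rough sketch of this idea follows. The path layout, the `.ttl` suffix, the use of random UUIDs as IDs, and both helper functions are assumptions for illustration only; Darcy's actual naming scheme may differ.

```python
import uuid


def comment_url(pod_root: str, post_id: str, comment_id: str) -> str:
    """Build a hard-to-guess comment URL from the two IDs (path layout is assumed)."""
    return f"{pod_root.rstrip('/')}/comments/{post_id}/{comment_id}.ttl"


post_id = uuid.uuid4().hex  # random, so the resulting path cannot be guessed
first = comment_url("https://bob.example/", post_id, uuid.uuid4().hex)
second = comment_url("https://bob.example/", post_id, uuid.uuid4().hex)

# The post on Alice's pod carries the list of comment URLs, so only
# readers of the post learn where the publicly readable comments live.
post = {"id": post_id, "readers": {"Bob", "Carol"}, "comment_urls": [first, second]}


def visible_comment_urls(post: dict, agent: str) -> list[str]:
    """Return the comment URLs an agent learns by reading the post, if allowed."""
    return post["comment_urls"] if agent in post["readers"] else []


print(len(visible_comment_urls(post, "Carol")))  # 2
print(len(visible_comment_urls(post, "Frank")))  # 0
```

Note that nothing here stops Carol from passing a URL on to Frank, at which point the comment's public read permission lets him load it.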

The downside is that if someone leaks the URL to a comment, everyone who receives that leak can access that comment. Anyone who can read the post can get to those comment URLs. One might argue that people could do that with a screenshot or copy-pasted text too, but with those, one can always claim that they are faked. In contrast, a comment loaded from my own pod is clearly created by myself. This makes such a leak in theory much more sensitive.

Ideal Option 3: The ideal solution from our point of view would involve cryptography and an extension of the current Solid protocol. There would be no access control list of WebIDs attached to a comment. Instead, the Solid pod on which the comment is stored would rely on a cryptographic certificate proving that the WebID trying to access the comment currently has permission to access the original post. Only then would access to the comment be granted.

In the above example, Eve reads the original post on Alice’s pod. She sees a link to Bob’s comment and follows it. In order to load the comment, Eve’s client first loads a timestamped certificate from Alice’s pod that carries a signature from Alice and asserts that Eve’s WebID is allowed to see the post. Bob’s Solid pod can then verify the signature, the timestamp and Eve’s WebID, giving Eve access to the comment.

This would keep both the comment as well as the whole of Alice’s contact list confidential – Bob will only know about those people who actively try to access his comments.
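The three checks Bob's pod would perform can be sketched as follows. Everything in this block is hypothetical, since no such extension exists in Solid: the certificate fields, the function names and the five-minute validity window are made up. To stay runnable with the standard library alone, the sketch also uses an HMAC as a stand-in for a real digital signature. An actual design would need an asymmetric scheme, so that Bob's pod only has to know Alice's public key.

```python
import hashlib
import hmac
import time

MAX_AGE_SECONDS = 300  # assumed validity window, not taken from any specification


def issue_certificate(key: bytes, webid: str, post_url: str, now: float) -> dict:
    """Alice's pod asserts that `webid` may currently read `post_url`."""
    issued_at = int(now)
    message = f"{webid}|{post_url}|{issued_at}".encode()
    # HMAC stands in for Alice's signature; a real design would sign asymmetrically.
    signature = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"webid": webid, "post": post_url, "issued_at": issued_at, "signature": signature}


def verify_certificate(key: bytes, cert: dict, requester: str, post_url: str, now: float) -> bool:
    """Bob's pod checks the signature, the timestamp and the WebID."""
    message = f"{cert['webid']}|{cert['post']}|{cert['issued_at']}".encode()
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return (
        hmac.compare_digest(expected, cert["signature"])  # signature is valid
        and cert["webid"] == requester                    # issued to this requester
        and cert["post"] == post_url                      # for the post this comment belongs to
        and 0 <= now - cert["issued_at"] <= MAX_AGE_SECONDS  # still fresh
    )


key = b"demo-key"  # placeholder for Alice's signing key
post_url = "https://alice.example/posts/1"
eve = "https://eve.example/profile/card#me"
frank = "https://frank.example/profile/card#me"

now = time.time()
cert = issue_certificate(key, eve, post_url, now)

print(verify_certificate(key, cert, eve, post_url, now))         # True
print(verify_certificate(key, cert, frank, post_url, now))       # False: wrong WebID
print(verify_certificate(key, cert, eve, post_url, now + 3600))  # False: expired
```

Because the certificate is short-lived, a change to the permissions on Alice's post takes effect for the comments as soon as old certificates expire, without anyone touching Bob's pod.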

Why is this a problem specific to Solid?

In a centralised system like Facebook for example, this is actually a non-issue. All permissions are managed by the same central instance. That means that this central instance (and those who control it) is the single source of truth about which permission set is visible for whom in the user interface. Thus the central instance acts as the neutral trusted arbiter, making sure that the permission sets for posts and comments are in sync without leaking any information.

The highly decentralised nature of Solid is what makes this a problem in the first place, as the different actors need to synchronise with each other while making sure to only transmit a minimum of information.

Conclusion

Alas, option three is, even though we think it would be best, currently way outside of the Solid specification. We had a lot of internal debate about which of the theoretical harms of options one or two would be worse.

In the end, I consulted with a few privacy experts and internet activists, and the unanimous vote from them was that the exfiltration of contact lists through option 1 would be way worse than potentially being criticised for something you really did write on the Internet. This means that instead of, for example, relying on a (potentially forged) screenshot as proof of what someone has written, people who have access to the comment could now forward a link to the comment to others.

So we will adopt option 2 for now: Comments are formally public, but only addressable from the original post. To mitigate harm, we will make sure that those who use Darcy know that their comments are potentially public if someone leaks the link outside of the group. That way, users can take this into consideration when writing a comment.

For us, this was a very interesting challenge. It shows that there are a lot of tricky problems and opportunities when we commit to decentralised spaces. Any social media concept needs to spend a lot of time to think about the various levels of privacy, consent, information, ease of use, and other factors. And we need to make the outcome of these deliberations clear to those who then use the platform.

As I am writing this blog post, the Parler hack has just happened. This prompted me to draw another conclusion: Decentralising the social network through Solid, while making sure to handle permissions and discoverability right, makes it a lot safer against this kind of data breach. There is no single point of entry that can be exploited, and no single service that would take the whole network down if compromised.

Of course, a lot of that changes once we introduce the moderation services, as those could in theory become an attack vector, but we do plan to implement them properly and safely.